
Adaptive Hypermedia 2004, Eindhoven

Peter Scott, Monday 30 Aug 2004

The Adaptive Hypermedia 2004 conference ran in Eindhoven in the Netherlands this year (August 23-26, 2004). I was there because the conference had some dedicated Prolearn sessions and hosted a meeting of the executive board. There were a number of very good papers, with a strong showing from the Irish, Trinity especially. My personally recommended papers (apart from the Prolearn and Irish ones, of course 😉) were in the session that I chaired: Rogerio Rodrigues and team showed a very neat “talking head” media formatting system, and Keith Vander Linden discussed a generic “agent” architecture for contextualized information retrieval, called Myriad.

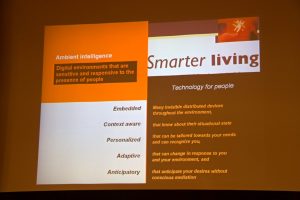

The opening keynote was from Emile Aarts, who presented a seductive Philips Research vision of an adaptive and ubiquitous future. For Emile, the best model for seamless ambient intelligence is the Roald Dahl character “Matilda”: she just points, and what she wants to happen just happens. So Emile’s talk followed Matilda growing up. He was also pretty keen on the film “Minority Report”, from which he showed a few clips, pointing out that it is to the movies (rather than corporate promos) that we need to go for our more realistic “future tech scenarios”.

He insists that the “hardware side” is ready but the “soft side” is lagging behind! He listed 11 key technologies that are in place now to support an ambient revolution, including polyLED displays, DVR-Blue, solid-state incandescent LED, RFID tagging, silicon-everywhere, wearables, etc.

Emile had a nice example of wearable health monitor clothing for those at risk of cardiac arrest. Evidently, you may have only a minute or so to tell someone that you are having a heart attack, but then, say, 15 minutes when their help could save you. Emile says that if your clothing is monitoring you, then it should have an opportunity to “speak for you” and call for help! He also presented a nice little study of “emotional computing”, where the Philips folks have been working on giving a large range of emotional responses to a little dog avatar. Evidently they have found that users are much more accepting of a little dog than of any sort of human-like intelligence. When things (inevitably) go wrong, people seem happier to attribute the mistake to “the dog”, rather than to themselves or the machine.

Candy Sidner (from the MERL labs @ Mitsubishi) gave an interesting keynote based mainly around their collaborative agent system COLLAGEN, a generic COLLaborative architecture for AGENts. The COLLAGEN architecture is founded upon her classical work with Barbara Grosz and others on intentions and shared discourse. (Indeed, it looked to me as if classical AI research is alive and well in the Mitsubishi research labs. No, really, I think that is a good thing!)

The Java implementation has a “focus stack” to track what the agent is currently working on and a plan or “purpose tree” to manage mutual agent-user intentions. She demoed a simple system for talking to your VCR to record programmes using a simple syntax, where the agent understood that some requests had a number of steps. The COLLAGEN focus stack helps it to manage which tasks to do when, handling the plan sequencing constraints, etc. She then showed a promo for the Mitsubishi Electric DiamondHelp system. The newest ME devices can now be programmed generically from remote stations (PC, ME television, etc.), such that you talk to the agent on the PC and it then downloads instructions to the device over the domestic electrical wiring (X10-like). Essentially, the tricky button interfaces on devices such as washing machines, VCRs, DVD players, air-conditioners and heating thermostats are replaced by a text chat with the user. All the “structured histories” of what you say to devices and ask them to do are recorded and exposed to users.
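To make the focus stack and purpose tree idea a little more concrete, here is a minimal sketch of how the two might cooperate on the VCR example. It is written in Python for brevity and is not COLLAGEN's actual Java API: the names `Purpose`, `FocusStack` and `advance`, and the three recording steps, are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Purpose:
    """A node in a (hypothetical) purpose tree: a goal plus its ordered sub-steps."""
    name: str
    steps: List["Purpose"] = field(default_factory=list)
    done: bool = False


class FocusStack:
    """Tracks which purpose is currently in focus (hypothetical, not COLLAGEN's API)."""

    def __init__(self, root: Purpose) -> None:
        self._stack: List[Purpose] = [root]

    def current(self) -> Optional[Purpose]:
        """Return the purpose in focus, or None once the whole plan is finished."""
        return self._stack[-1] if self._stack else None

    def advance(self) -> Optional[str]:
        """Return the name of the next primitive step, or None when nothing is left."""
        while self._stack:
            top = self._stack[-1]
            pending = next((s for s in top.steps if not s.done), None)
            if pending is not None:
                # Shift focus down to the first unfinished sub-step.
                self._stack.append(pending)
                continue
            # Nothing pending below this purpose: mark it done and pop it.
            top.done = True
            self._stack.pop()
            if not top.steps:
                # A leaf purpose is a primitive action to carry out now.
                return top.name
        return None


# Assumed decomposition of "record a programme" into ordered steps.
plan = Purpose("record programme", [
    Purpose("choose channel"),
    Purpose("set start time"),
    Purpose("set end time"),
])

focus = FocusStack(plan)
order = []
while (step := focus.advance()) is not None:
    order.append(step)
```

In this sketch the sequencing constraint is simply the order of the sub-steps in the tree; a real system would presumably need richer constraints and would also have to handle the user interrupting or changing the plan part-way through.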

She finished with a discussion of real human engagement with physical robots. Real people attend to many things at the same time, and speakers use “back channels” to manage the interaction (e.g. pausing or dropping intonation to elicit “yes, I am still listening” feedback). In the MERL labs, Mel is a small robotic “penguin” device that works on this: it has a camera, mics, and moving parts, including flapping wings. Mel “manages” the interaction (rather than being passive and reactive, as robots usually are). It was nice to see the robot maintaining simple proxemic cues in an interaction: it looks at objects that it talks to the user about, and uses face tracking to check that the user has looked at them also. It also (nicely) uses face tracking to “look” at the users appropriately during the conversation. It otherwise has a pretty limited conversational repertoire!

They are currently working on making Mel mobile and giving him stereoscopic vision (he really needs to understand “nodding”!).
